I really wish I had been born earlier so I could have witnessed the early years of Linux. What was it like being there when a kernel was released that would power multiple OSes and, best of all, for free?

I want to know about everything: software, hardware, games, early community, etc.

Remember the slow internet? You had to wait overnight for a 40-megabyte game, only to find out it didn’t work.

Half the time because a random disconnect happened in the middle and the download didn’t resume.

In glorious 256 colors!

Up all night, and all you got to see was a boob

Sometimes a boob who spent the previous night compiling a custom webcam driver. :(

Remember the Internet at these speeds, Moss? Up all night and you’d see three women.

jad

Remember when packages like RPM were first introduced, and it was like, “cool, I don’t have to compile everything!” Then you were introduced to Red Hat’s version of DLL-Hell when the RPM couldn’t find some obscure library! Before YUM, rpmfind.net was sooo useful!
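For anyone who never hit it, here’s a toy sketch of the check that kept failing: walk a package’s requirements transitively and report whatever is neither installed nor available anywhere you know about. Everything in it is hypothetical (the package names, the `REPO` dict, the `missing_deps` helper); real RPM works from much richer `Requires:`/`Provides:` metadata, so treat this as an illustration, not how rpm is actually implemented.

```python
from collections import deque

# Hypothetical repository metadata: package -> packages it requires.
REPO: dict[str, list[str]] = {
    "coolapp": ["libfoo", "libbar"],
    "libfoo": ["libc"],
    "libbar": ["libobscure"],  # libobscure isn't in any repository we know of
    "libc": [],
}


def missing_deps(pkg: str, installed: set[str]) -> list[str]:
    """Return requirements that are neither installed nor resolvable from REPO."""
    missing: list[str] = []
    seen: set[str] = set()
    queue = deque([pkg])
    while queue:
        name = queue.popleft()
        if name in seen:
            continue
        seen.add(name)
        if name in installed:
            continue  # already satisfied; assume its own deps are too
        if name not in REPO:
            missing.append(name)  # the "obscure library" nobody can find
            continue
        queue.extend(REPO[name])  # breadth-first walk of its requirements
    return missing


print(missing_deps("coolapp", installed={"libc"}))
```

Running it prints `['libobscure']`, which is roughly the moment you went off to rpmfind.net to hunt that library down by hand.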

I still use pkgs.org pretty frequently when I need to find versions of packages and their dependencies across different distros and versions of distros. I had to use that to sneakernet something to fix a system just this past week.

Oh sites like that are absolutely still useful! Especially for older distros or when you need a specific version that you can’t find for whatever reason.

Poor Annie.

Alrighty, old Linux user from the earliest of days.

It was fun, and really great to have a one-on-one with Linus when LILO gave issues with the graphics card and the screen stayed blank during booting.

It was new and few fellow students were interested, but the few who were all have serious jobs in IT right now.

Probably the mindset and the drive to test out new stuff, combined with the power Linux gave.

OMG… BOFH! I need to go find those stories now :-)

fortunes-bofh-excuses on Debian

For that, I’ll spin up a copy…

Holy crap, I got lazy. Perfect ;-)

The BOFH and his PFY are still helping their users…

Didn’t expect to see a legend just scrolling here. Thank you for your contributions to computer science.

Clumsy. Manual. No multimedia support really. Compiling everything on 486 machines took hours.

Can’t say I look back fondly on it.

BeOS community was fucking awesome though. That felt like the cutting edge at the time.

BeOS and NetBSD were where it was at, for sure!!

Yeah, I’ve tried it out. It’s just years behind any Linux desktop right now though. The entire point of BeOS was to be a multimedia powerhouse, and it was. Everything else has surpassed it at this point though.

I desperately wanted one of those first BeBoxes or whatever they were called. And one of those little SGI toasters… I even tried to compile SGI’s 3D file manager (demo) from Jurassic Park.

Herp derp… where can I download an OpenGL from… it keeps saying I can’t build it without one 🤤

I can’t remember much about it now, but I remember really wanting BeOS. I managed to get it installed once, but couldn’t get the internet working, so ended up uninstalling it.

It wasn’t too early, maybe 1997.

I was like 12 or so and I had just installed Linux.

I figured out, from the book I was working with, how to get my Windows partition to automatically mount at boot. Awesome!

I had not been able to figure out how to start “x” though.

So I rebooted into Windows, got on EFnet #linux, and asked around.

Got a command, wrote it down on a slip of paper, and rebooted into Linux.

I should mention, I also hadn’t figured out about privileges, or at least why you wouldn’t want to run around as root.

Anyway, I started typing in the command that I wrote down:

rm -rf /

I don’t have to tell you all: that is not the correct command. The correct command was

startx

After I figured it was taking way too long, I decided to look up what the command did, and then immediately shut down the system.

It was far too late.

My pranks were less destructive …

/ctcp nick +++ath0+++… it was amazing how often that worked. 🤣

PRESS ALT+F4 for ops! 😂

OMG… the showmanship…

Someone-being-bratty-on-IRC: […]

Me: We’re going to take away your internet access if you don’t behave.

Bratty: Fuck you! You can’t do tha

5 minutes later…

Bratty: How did you do that???

That’s a new one on me. What did that do, if I may ask? The best I have been able to figure out is that it’s probably IRC-related, but that’s it.

+++ath0 is a command that tells a dial-up modem to disconnect (+++ drops a Hayes-compatible modem into command mode, and ATH0 hangs up). I’ve never seen it used in IRC this way, but my guess is that the modem would see this coming from the computer and disconnect.

This was back in the days when everything was unencrypted.

Yes, and encryption had nothing to do with it (though I suppose it would have prevented it in this case).

A properly configured modem would ignore this coming from the Internet side, or escape the characters so that they didn’t form that string.

Encryption would prevent it - that’s what I meant :)

I think the trick is to convince someone to send that string, so the modem sees it coming from the computer. Similar to tricking someone into pressing Alt+F4, or Ctrl+Alt+Del twice on Windows 9x (instantly reboots without prompting).

encryption would prevent the modem from seeing it when someone sends it, but such a short string will inevitably appear once in a while in ciphertext too. so, it would actually make it disconnect at random times instead :)

(edit: actually at seven bytes i guess it would only occur once in every 72PB on average…)
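The 72PB figure checks out, assuming ciphertext behaves like uniformly random bytes and counting only exact matches of the 7-byte string (ignoring real modem details such as guard times). A quick sketch of the arithmetic:

```python
# Assumption: ciphertext looks like uniformly random bytes, and we only count
# exact matches of this 7-byte string (no case variants, no modem guard times).
PATTERN = b"+++ath0"

# At any given offset all 7 bytes must match, with probability (1/256)**7 = 2**-56,
# so on average the string shows up once every 256**7 bytes.
expected_gap_bytes = 256 ** len(PATTERN)

print(expected_gap_bytes)                      # 72057594037927936
print(f"{expected_gap_bytes / 1e15:.1f} PB")   # 72.1 PB (decimal petabytes)
print(expected_gap_bytes // 2**50, "PiB")      # 64 PiB (binary units)
```

That’s 2^56 bytes: roughly 72 decimal petabytes, or exactly 64 PiB in binary units.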

Explained nicely here: https://everything2.com/title/%252B%252B%252BATH0

Wow, a post from 2001 that’s still online today. You don’t see that often any more!

That’s terrible! They helped me fix my system when I decided I was fancy enough to try building a new version of gcc and go off-script a bit.

IIRC I deleted library.so rather than overwriting it. If I hadn’t been running IRC on another terminal already, I would have been done for.

You spent a few evenings downloading a hundred or so 1.44MB floppy images over a 56kbps modem. You then booted the installer off one of those floppies, selected what software you wanted installed and started feeding your machine the stack of floppies one by one.

Once that was complete you needed to install the Linux boot loader “LILO” to allow you to boot it (or your other OS) at power on.

All of that would get you to the point where you had a text mode login prompt. To get anything more you needed to gather together a lot of detailed information about your hardware and start configuring software to tell it about it. For example, to get XFree86 running you needed to know

- what graphics chip you had

- how much memory it had

- which clock generator it used

- which RAMDAC was on the board

- what video timings your monitor supported

- the polarity of the sync signals for each graphics mode
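To give a feel for how those numbers fit together: the dot clock from the clock generator, divided by the total pixels per scanline (visible plus blanking), gives the horizontal scan rate, and dividing again by the total scanlines per frame gives the refresh rate. A minimal sketch, assuming the standard VESA 640x480 @ 60 Hz timing; the `Modeline` class, the `fits_monitor` helper and the monitor ranges are hypothetical stand-ins for the HorizSync/VertRefresh limits you had to dig out of your monitor’s manual.

```python
from dataclasses import dataclass


@dataclass
class Modeline:
    """Timing numbers in the same order as an XFree86 Modeline entry."""
    name: str
    dot_clock_mhz: float  # pixel clock produced by the card's clock generator
    hdisp: int            # visible pixels per scanline
    hsync_start: int
    hsync_end: int
    htotal: int           # pixels per scanline, including blanking
    vdisp: int            # visible scanlines
    vsync_start: int
    vsync_end: int
    vtotal: int           # scanlines per frame, including blanking
    flags: str = ""       # sync polarities, e.g. "-hsync -vsync"

    def hsync_khz(self) -> float:
        # Horizontal scan rate: scanlines drawn per second, in kHz.
        return self.dot_clock_mhz * 1e3 / self.htotal

    def refresh_hz(self) -> float:
        # Vertical refresh: full frames drawn per second.
        return self.dot_clock_mhz * 1e6 / (self.htotal * self.vtotal)


def fits_monitor(mode: Modeline,
                 hsync_range_khz: tuple[float, float],
                 vrefresh_range_hz: tuple[float, float]) -> bool:
    """Hypothetical check against a monitor's HorizSync/VertRefresh limits."""
    h_lo, h_hi = hsync_range_khz
    v_lo, v_hi = vrefresh_range_hz
    return h_lo <= mode.hsync_khz() <= h_hi and v_lo <= mode.refresh_hz() <= v_hi


# Standard VESA 640x480 @ 60 Hz timing.
vga = Modeline("640x480", 25.175, 640, 656, 752, 800,
               480, 490, 492, 525, "-hsync -vsync")

print(f"{vga.hsync_khz():.2f} kHz, {vga.refresh_hz():.2f} Hz")
# Made-up monitor limits: 30-38 kHz horizontal, 50-70 Hz vertical.
print(fits_monitor(vga, (30.0, 38.0), (50.0, 70.0)))
```

This prints 31.47 kHz, 59.94 Hz and then True. Get the dot clock or the totals wrong and the resulting rates can land outside what the monitor can handle, which is why those old books were so nervous about it.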

This level of detail was needed for every little thing:

- how many heads and cylinders did your hard drives have

- which ports and IRQs did your sound card use

- was it Sound Blaster compatible or some other protocol

- what speeds did your modem support

- did it need any special setup codes

- what protocol did your ISP use over the phone line

- what was the procedure to set up and tear down a network link over it

The advent of PCI and USB made things a lot better. Now things were discoverable, and software could auto-configure itself a lot of the time because there were standard ways to ask for information about what was connected.

Jesus Christ. Glad I got to ride on the backs of the giants before me. Live CDs were so much fun back around 2001.

I’ve put on a bit of weight since then, but I wouldn’t say that I’m giant.

You brought back traumatic memories I had successfully repressed.

All my homies who were into it were like “everything is free you just have to compile it yourself”

And I was like “sounds good but I cannot”

Then all the cool distros got mature and feature laden.

If you were a competent computer scientist it was rad.

If you were a dummy like me who just wanted to play StarCraft and Doom, you wasted a lot of time and ended up reinstalling Windows.

I learned how to make a dual boot machine first.

My friend wanted to get me to install it, but he had a 2nd machine to run Windows on. So we figured out how to dual boot.

And then we learned how to fix windows boot issues 😮💨

We mostly did it for the challenge. Those Linux Magazine CDs with new distros and software were a monthly challenge of “How can I install this and also not destroy my ability to play Diablo?”

I definitely have lost at least one install to getting stuck in vim, flailing the keyboard and writing garbage data into a critical config file before rebooting.

Modern Linux is amazing in comparison, you can use it for essentially any task and it still has a capacity for customization that is astonishing.

The early days were interesting if you like getting lost in the terminal and figuring things out without a search engine. Lots of trial and error, finding documentation, reading documentation, etc.

It was interesting, but be glad you have access to modern Linux. There’s more to explore, better documentation, and the capabilities that you can pull in are still astonishing.

I love modern CLI Linux distros.

I am about to plunge into desktop Linux this year.

Linux phones, PCs and tablets only for me from now on

Death to oligarch business!

Linux phone

What do you use? Is it your daily driver?

I have not chosen yet.

I am between the Purism Librem 5 (expensive) and the PinePhone (cheap)

I am leaning toward the PinePhone since it’s so cheap that if I hate it, it won’t ruin me

Have you considered SailfishOS?

Personally I recommend getting a Sony Xperia and installing it yourself.

SFOS has been my daily driver for 5 years now.

I paid €49 for the license, so it’s a bargain right now at 24.90, and my latest device, an xperia x10ii, cost just €60.

Let me know if you get your Pinephone working well enough to daily-drive, 'cause I’ve got one sitting around collecting dust.

Which linux phone is practical?

Almost all of them lack good hardware and feel overpriced.

I use SailfishOS on a Sony Xperia. 50€ for the SFOS license, 60€ for the phone.

Which iPhone isn’t overpriced lol

I like the Librem 5 for the features but it is expensive.

I like the PinePhone for the price.

“Please insert Slackware disk Set A disk 3”

We had multiple fireproof boxes loaded with floppy backups…

If you wanted to run Unix, your main choices were workstations (Sun, Silicon Graphics, Apollo, IBM RS/6000) or servers (DEC, IBM). They all ran different flavors of BSD or System V Unix and weren’t compatible with each other. Third-party software packages had to be ported and compiled for each one.

On x86 machines, you mainly had commercial SCO, Xenix, and Novell’s UnixWare. Their main advantage was that they ran on slightly cheaper hardware (< $10K, instead of $30-50K), but they only worked on very specifically configured hardware.

Then along came Minix, which showed a clean non-AT&T version of Unix was doable. It was 16-bit, though, and mainly ended up as a learning tool. But it really goosed the idea of an open-source OS not beholden to System V. AT&T had sued BSD, which scared off a lot of startup adoption and limited Unix to those with deep pockets. Once that case was settled, things opened up.

Shortly after that Linux came out. It ran on 32-bit 386es, was a clean-room build, and fully open source, so AT&T couldn’t lay claim to it. FSF was also working on their own open-source version of unix called GNU Hurd, but Linux caught fire and that was that.

The thing about running on PCs was that there were so many variations on hardware (disk controllers, display cards, sound cards, networking boards, even serial interfaces).

Windows was trying to corral all this crazy variety into a uniform driver interface, but you still needed a custom driver, delivered on a floppy, that you had to install after mounting the board. And if the driver didn’t match your DOS or Windows OS version, tough luck.

Along came Linux, eventually having a way to support pluggable device drivers. I remember having to rebuild the OS from scratch with every little change. Eventually, a lot of settings moved into config files instead of #defines (which would require a rebuild). And once there were loadable kernel modules, you didn’t even have to reboot to update drivers.

The number of people who would write and post up device drivers just exploded, so you could put together a decent machine with cheaper, commodity components. Some enlightened hardware vendors started releasing with both Windows and Linux drivers (I had friends who made a good living writing those Linux drivers).

Later, with the Apache web server and databases like MySQL and Postgres, Linux started getting adopted in data centers. But on the desktop, it was mostly for people comfortable in a terminal. X was ported, but it wasn’t until Red Hat came around that I remember doing much with UIs. And those looked pretty janky compared to what you saw on NeXTSTEP or SGI.

Eventually, people got Linux working on brand-name hardware like Dell and HP, so you didn’t have to learn how to assemble PCs from scratch. But Microsoft tied these vendors up so that if you bought their hardware, you also had to pay for a copy of Windows, even if you didn’t want to run it. It took a government case against Microsoft before hardware makers were allowed to offer systems with Linux preloaded and without the Windows tax. That’s when things really took off.

It’s been amazing watching things grow, and software like LibreOffice, Wayland, and Snap helped move things into the mainstream. If it wasn’t for Linux virtualization, we wouldn’t have cloud computing. And now, with Steam Deck, you have a new generation of people learning about Linux.

PS, this is all from memory. If I got any of it wrong, hopefully somebody will correct it.

That’s a great bit of history

It may be useful for people reading if you could add headers about when each decade starts, since you have many of them there

Before modularized kernels became the standard I was constantly rerunning “make menuconfig” and recompiling to try different options, or more likely adding something critical back in :-D

I totally forgot about the shift to modules. What an upgrade!

Unlike other OSes, information about it was mainly on the internet, not in books or magazines. With only one computer in most homes, and no other internet-connected devices, that posed a problem when something didn’t work.

It took me weeks to write a working X11 config on my computer, finding all the hsync/vsync values that worked by rebooting back and forth. And the result was very underwhelming, just a terminal in an immovable window. I think I figured out how to install a window manager but lost all patience before getting to a working DE. Days and days of fiddling and learning.

Lol! 'Member AfterStep?

The desktop stretched across 4 screens was enough to hook me for life.

Xeyes… so many terminals… the artwork was artwork… wtf is transparency?! 😁 It was an amazing time to be a geek.

I didn’t get that far. And I only had an Amiga at that time, which made things more difficult to set up. I wonder how fluent transparency would be with AGA, haha. My next attempt was with a PC around 2003 with KDE3 and it got me hooked.

Speaking of books, my only experience with Linux in the 90s was seeing the Red Hat books. I don’t know anyone who actually made it work.

I didn’t see these until much later

You got it from a friend as a pile of Slackware floppies labeled with various letters. It felt amazing and fresh, everything you could need was just a floppy away.

Then we got Gentoo and suddenly it was fun to wait 4 days to compile your kernel.

I remember my first Slackware installation from a pile of floppy disks!

I also remember that nothing worked after the installation, I had to figure out how to roll my own kernel and compile all the drivers. Kids today have it too easy.

shakes fist Now get offa ma lawn!

I tried compiling Gentoo a bit later, after upgrading from Windows 95. I could never get to a login screen, so I quit, and started using Linux later when it was easier to install

I remember I had over one hundred floppies to install it all. And those were just for the stuff I was interested in. This was circa 1996. I bought Red Hat 5.0 a year or so later. It came on 4 CD-ROMs and was cheaper than that pile of floppies had been.

Is Slackware just pirated software?

No, it’s one of the first Linux distributions

Thanks! The Wikipedia article was an interesting read. It seems it was closed source? That’s an interesting Linux method

Slackware is still around, no past tense. What makes you think it was closed source?

There is no formal issue tracking system and no official procedure to become a code contributor or developer. The project does not maintain a public code repository. Bug reports and contributions, while being essential to the project, are managed in an informal way. All the final decisions about what is going to be included in a Slackware release strictly remain with Slackware’s benevolent dictator for life, Patrick Volkerding.

That doesn’t make the source code proprietary or non-open, it just means it isn’t a community driven project.

It is a community-driven project, but there is no structured way to join.

You can become a member of the community when Patrick Volkerding or one of the lead devs ask you.

I’ve been in contact with them for a while and ultimately decided against contributing.

They acted too much like old men when you step on their lawn, and I don’t see the point in this distro anymore, apart from it being a blast from the past.

Literally everything it does is done better by others now.

That’s just the way things were done back then. Slack has been around long enough that that’s just the way it is.

Looks pretty open source to me https://mirrors.slackware.com/slackware/slackware-current/source/

A real pain in the ass. It was still worth it to use for the experience, especially if you had an actual reason to use it. Other than that it was just an exercise in futility most of the time…and I think that’s why we loved it. It was still kinda new. Interesting. And it didn’t spoon feed you. Was quite exhilarating.

Constantly trying to remember obscure bullshit fucking commands

I started using Linux in the late 90s. Here are some small things I recall that might be amusing:

- The installation process was easier than installing Arch (before Arch got an installer)

- I don’t recall doing any regular updates after things were working except for when a new major release came out.

- You needed to buy a modem to get online since none of the “winmodems” ever worked.

- Dependency hell was real. You’d try to install an RPM from Freshmeat and it would fail with all the missing libraries.

- GNOME and KDE felt seriously bloated. They always seemed to run painfully slowly on then-modern computers, which moved a lot of people to standalone window managers.

- It was hard to have a good web browser. Before Firefox came out you struggled along with Netscape. I recall having to use a statically compiled, ancient (even for the time) version of Netscape, as that was the only thing available at the time for OpenBSD.

- Configuring XFree86 (the predecessor to X.org) was excruciating. I think I still have an old book that cautioned if you configured your refresh rates and monitor settings incorrectly your monitor could catch on fire.

- As a follow-on to the last point, I once went about 6 months without any sort of GUI because I couldn’t get X working correctly.

- Before PulseAudio you’d have to go into every application that used sound and pick from a giant drop down list of your current sound card drivers (ALSA and OSS) combined with whatever mixer you were using. You’d hope the combo you were using was supported.

- Everyone cheered when you no longer had to fight to get Flash working for a decent web browsing experience.

I think I still have an old book that cautioned if you configured your refresh rates and monitor settings incorrectly your monitor could catch on fire.

Are you telling me that one dev for X.org could set someone’s monitor on fire by fucking with four lines of code?

Jesus Christ, thanks for that, I didn’t need to sleep tonight.

Monitors don’t work like that anymore. The ones that could catch on fire are pretty much all in the landfills by now.

I don’t recall doing any regular updates

You needed to buy a modem to get online

If you stay offline, you don’t need to upgrade to prevent viruses or hacking. That was the norm in the good old days.

And you needed to find out the scanlines of your monitor before X would even display anything, and then that was a black and white grid. Then you needed to spend another day or two getting a window manager working.

Oh god, was there even a maximize button so you could maximize your windows?

What did maximize even mean when you had a “virtual desktop” that was 4 times the size of your actual display? Because that is the kind of nonsense we used to do on Linux (because you could and the other guys could not).

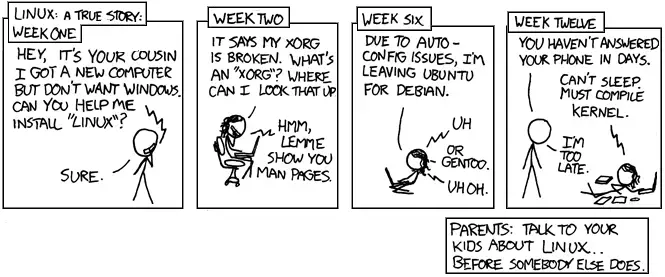

Relevant xkcds

Do you have support for smooth full-screen Flash video yet?

I don’t remember if that ever got fixed. Even if it did, Flash was already on its way out by that point.

Some technologies are better skipped, ignored until they collapse under their own annoyance.